The first release of RoverByte is coming soon, along with a demo. This has been a long time in the making, not just as a product but as a well-architected AI system that serves as the foundation for something far greater. As I refined RoverByte, it became clear that the system needed an overhaul to truly unlock its potential. This led to the RoverRefactor, a redesign aimed at making the code architecture clear and aligned with the roadmap. With that groundwork laid, future development should be much smoother. It also brings the focus back to the AI portion of RoverByte, the culmination of a dream that began percolating around 2005.

At its core, RoverByte is more than a device. It is the first AI of its kind, built on principles that extend far beyond a typical chatbot or automation system. Its power comes from the same tool it uses to help you manage your life: Redmine.

📜 Redmine: More Than Project Management – RoverByte’s Memory System

Redmine is an open-source project management suite, widely used for organizing tasks, tracking progress, and structuring workflows. But when combined with AI, it transforms into something entirely different—a structured long-term memory system that enables RoverByte to evolve.

Unlike traditional AI that forgets interactions the moment they end, RoverByte records and refines them over time. This is not just a feature—it’s a fundamental shift in how AI retains knowledge.

Here’s how it works:

1️⃣ Every interaction is logged as a ticket in Redmine (status: New).

2️⃣ The system processes and refines the raw data, organizing it into structured knowledge (status: Ready for Training).

3️⃣ At night, RoverByte “dreams,” training itself with this knowledge and updating its internal model (status: Trained).

4️⃣ If bias is detected later, past knowledge can be flagged, restructured, and retrained to ensure more accurate and fair responses.
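The four steps above amount to a small state machine over ticket statuses. Here is a minimal in-memory sketch of that lifecycle. It does not talk to Redmine at all. The `MemoryTicket` class, the `TRANSITIONS` table, and the `Flagged` status name are assumptions made for illustration, since the steps above only name New, Ready for Training, and Trained.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    NEW = "New"
    READY = "Ready for Training"
    TRAINED = "Trained"
    FLAGGED = "Flagged"  # assumed name for step 4; not a status named in the post


# Allowed moves between statuses, following steps 1-4 above (illustrative only).
TRANSITIONS = {
    Status.NEW: {Status.READY},
    Status.READY: {Status.TRAINED},
    Status.TRAINED: {Status.FLAGGED},
    Status.FLAGGED: {Status.READY},  # restructured knowledge is queued for retraining
}


@dataclass
class MemoryTicket:
    """Hypothetical stand-in for one logged interaction."""
    subject: str
    status: Status = Status.NEW
    history: list = field(default_factory=list)

    def move_to(self, new_status: Status) -> None:
        """Change status, rejecting moves the lifecycle does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"cannot go from {self.status.value} to {new_status.value}"
            )
        self.history.append(self.status)
        self.status = new_status


ticket = MemoryTicket("User asked for a morning reminder")
ticket.move_to(Status.READY)    # step 2: refined into structured knowledge
ticket.move_to(Status.TRAINED)  # step 3: used during the nightly "dream"
ticket.move_to(Status.FLAGGED)  # step 4: bias detected later
ticket.move_to(Status.READY)    # step 4: restructured and queued again
print(ticket.status.value)      # prints "Ready for Training"
```

Keeping the allowed transitions in one table means a ticket cannot jump straight from New to Trained, and the `history` list leaves a trail of how each piece of knowledge reached its current state.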

This process ensures RoverByte isn’t just reacting—it’s actively improving.

And that’s just the beginning.

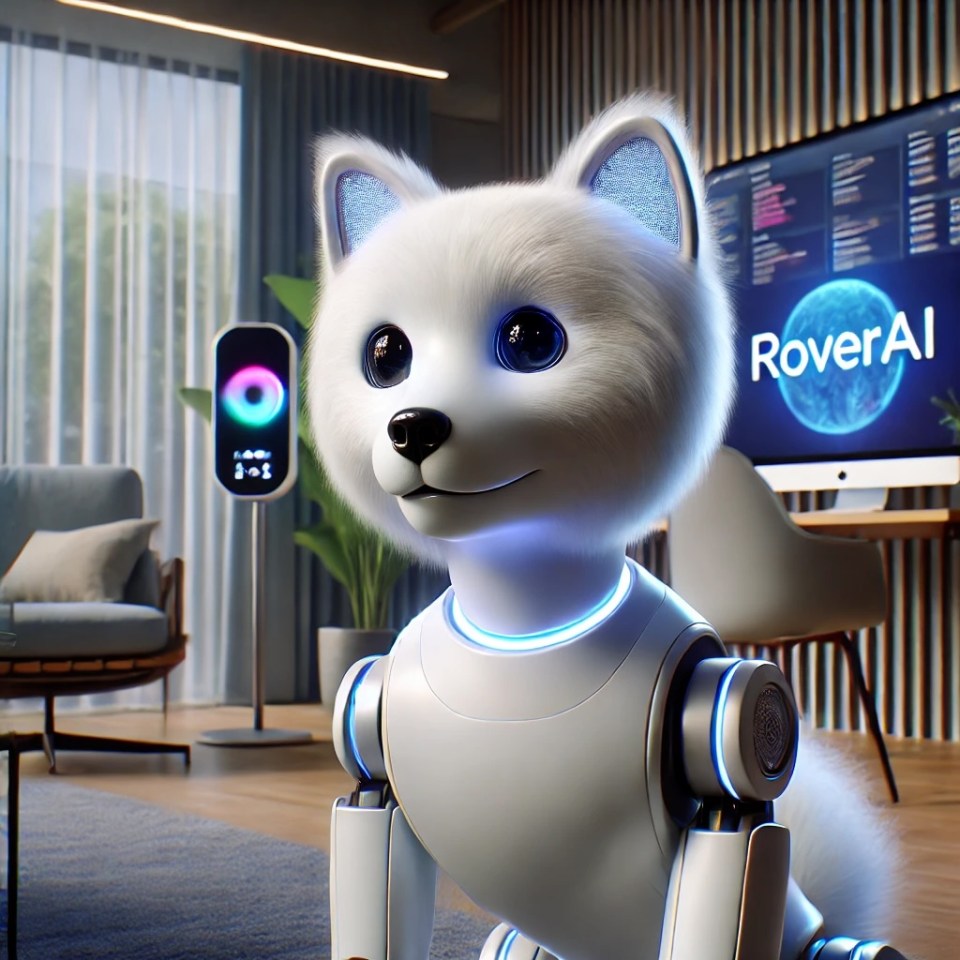

🌐 The Expansion: Introducing RoverAI

RoverByte lays the foundation, but the true breakthrough is RoverAI—an adaptive AI system that combines local learning, cloud intelligence, and cognitive psychology to create something entirely new.

🧠 The Two Minds of RoverAI

RoverAI isn’t a single AI—it operates with two distinct perspectives, modeled after how human cognition works:

1️⃣ Cloud AI (OpenAI-powered) → Handles high-level reasoning, creative problem-solving, and general knowledge.

2️⃣ Local AI (Self-Trained LLM and LIOM Model) → Continuously trains on personal interactions, ensuring contextual memory and adaptive responses.

This approach is inspired by research on the brain's hemispheres and by bicameral mind theory, in which thought and reflection emerge from the dialogue between two cognitive systems.

• Cloud AI acts like the neocortex, providing vast external knowledge and broad contextual reasoning.

• Local AI functions like the subconscious, continuously refining its responses based on personal experiences and past interactions.
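One way to picture the dialogue between the two minds is this: the local side recalls what it knows about the user, and that context is handed to the cloud side along with the question. The sketch below is a toy under stated assumptions, not RoverAI's actual design. The functions `recall`, `build_prompt`, `answer`, and `fake_cloud` are hypothetical names, the word-overlap lookup stands in for a trained local model, and no real cloud API is called.

```python
from typing import Callable, List


def _words(text: str) -> set:
    """Lowercased words with surrounding punctuation stripped."""
    return {w.strip("?.,!").lower() for w in text.split()}


def recall(question: str, memory: List[str]) -> List[str]:
    """Toy stand-in for the Local AI: keep memories sharing a word with the question."""
    asked = _words(question)
    return [m for m in memory if asked & _words(m)]


def build_prompt(question: str, memories: List[str]) -> str:
    """Prepend personal context from the local side to the question."""
    if not memories:
        return question
    context = "\n".join(f"- {m}" for m in memories)
    return f"Known about this user:\n{context}\n\nQuestion: {question}"


def answer(question: str, memory: List[str], cloud: Callable[[str], str]) -> str:
    """Local recall first, then hand the enriched prompt to the cloud side."""
    return cloud(build_prompt(question, recall(question, memory)))


def fake_cloud(prompt: str) -> str:
    """Placeholder for a cloud model call; it only reports what it received."""
    return f"(cloud model would answer here)\n{prompt}"


memory = ["Prefers coffee over tea", "Walks the dog at 7am"]
print(answer("When should I walk the dog?", memory, fake_cloud))
```

Passing the cloud call in as a plain function keeps the two sides loosely coupled, so the local recall step can be tested without any network access.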

The result? A truly dynamic AI system—one that can provide generalized knowledge while maintaining a deeply personal understanding of its user.

🌙 AI That Dreams: A Continuous Learning System

Unlike conventional AI, which is locked into pre-trained models, RoverAI actively improves itself every night.

During this dreaming phase, it:

✅ Processes and integrates new knowledge.

✅ Refines its personality and decision-making.

✅ Identifies outdated or biased information and updates accordingly.
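The bookkeeping side of a dreaming pass could look roughly like the sketch below: gather every ticket that is ready, write it out as a training example, and mark it as trained. The ticket dictionaries, the `prompt` and `response` field names, and the `dream` function are assumptions for illustration. The actual model update is left out entirely, since nothing above says how it is done.

```python
import json
from typing import Dict, List


def dream(tickets: List[Dict[str, str]]) -> str:
    """Collect 'Ready for Training' tickets into JSONL and mark them 'Trained'."""
    lines = []
    for ticket in tickets:
        if ticket["status"] != "Ready for Training":
            continue  # New or already Trained tickets are left alone
        example = {"prompt": ticket["prompt"], "response": ticket["response"]}
        lines.append(json.dumps(example))
        ticket["status"] = "Trained"  # a real system would do this only after training succeeds
    return "\n".join(lines)


tickets = [
    {"status": "New", "prompt": "What's the weather?", "response": "Sunny."},
    {"status": "Ready for Training", "prompt": "Remind me at 7.", "response": "Reminder set."},
]
print(dream(tickets))  # one JSON line, for the second ticket only
print(dream(tickets))  # empty: nothing is left in 'Ready for Training'
```

Running the pass twice shows why the status change matters: each interaction is trained on once, unless it is deliberately queued again.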

This means that every day, RoverAI wakes up smarter than before.

🤖 Beyond Software: A Fully Integrated Ecosystem

RoverAI isn’t just an abstract concept—it’s an ecosystem that extends into physical devices like:

• RoverByte (robot dog) → Learns commands, anticipates actions, and develops independent decision-making.

• RoverRadio (AI assistant) → A compact AI companion that interacts in real-time while continuously refining its responses.

Each device can:

✅ Connect to the main RoverSeer AI on the base station.

✅ Run its own specialized Local AI, fine-tuned for its role.

✅ Become increasingly autonomous as it learns from experience.

For example, RoverByte can observe how you give commands and eventually predict what you want—before you even ask.

This is AI that doesn’t just respond—it anticipates, adapts, and evolves.

🚀 Why This Has Never Been Done Before

Big AI companies like OpenAI, Google, and Meta ship models that do not keep learning from each user after deployment, in large part because a self-learning model can’t be centrally controlled.

RoverAI changes the paradigm.

Instead of an uncontrolled AI, RoverAI strikes a balance:

✅ Cloud AI ensures reliability and factual accuracy.

✅ Local AI continuously trains, making each system unique.

✅ Redmine acts as an intermediary, structuring memory updates.

The result? An AI that evolves—while remaining grounded and verifiable.

🌍 The Future: AI That Grows With You

Imagine:

• An AI assistant that remembers every conversation and refines its understanding of you over time.

• A robot dog that learns from your habits and becomes truly independent.

• An AI that isn’t just a tool—it’s an adaptive, evolving intelligence.

This is RoverAI. And it’s not just a concept—it’s being built right now.

The foundation is already in place, and with a first look at RoverByte coming soon, we’re taking the first step toward a future where AI is truly personal, adaptable, and intelligent.

🔗 What’s Next?

The first preview release of RoverByte is almost ready. Stay tuned for the demo, and if you’re interested in shaping the future of adaptive AI, now is the time to get involved.

🔹 What are your thoughts on self-learning AI? Let’s discuss!

📌 TL;DR Summary

• RoverByte is launching soon—a new kind of AI that uses Redmine as structured memory.

• RoverAI builds on this foundation, combining local AI, cloud intelligence, and psychology-based cognition.

• Redmine allows RoverAI to learn continuously, refining its responses every night.

• Devices like RoverByte and RoverRadio extend this AI into physical form.

• Unlike big tech AI, RoverAI is self-improving—without losing reliability.

🚀 The future of AI isn’t static. It’s adaptive. It’s personal. And it’s starting now.